Shipping faster with CircleCI pipelines and the Rainforest Orb

This post was originally published on the Rainforest Engineering blog. You can view the original post here.

———

Rainforest test failures fall into two broad categories based on what must be done to resolve the failure.

The first category is bugs: your regression test caught a regression! To fix it, you will need to change some code. The second category is “non-bugs”: everything else. Your test might need to be updated, your QA environment might have been misconfigured, or it might just be a flaky failure. For these failures, you should be able to eventually rerun the test and get it to pass without changing any application code.

When fixing a bug, you’ll want to rerun the full suite to ensure that while fixing one regression, you did not introduce another. But if you aren’t changing any code, there is no point in rerunning all the tests that just passed. You’ll just want to rerun the ones that failed and not waste time waiting for tests you know will pass to complete your release.

This is very similar to the kind of failures you can expect in a CI build. Your unit tests might catch a bug, requiring a code change and a brand new build. Or your deploy job might fail because of a network glitch, and you just want to rerun that individual CI job without rerunning all the other jobs in the build.

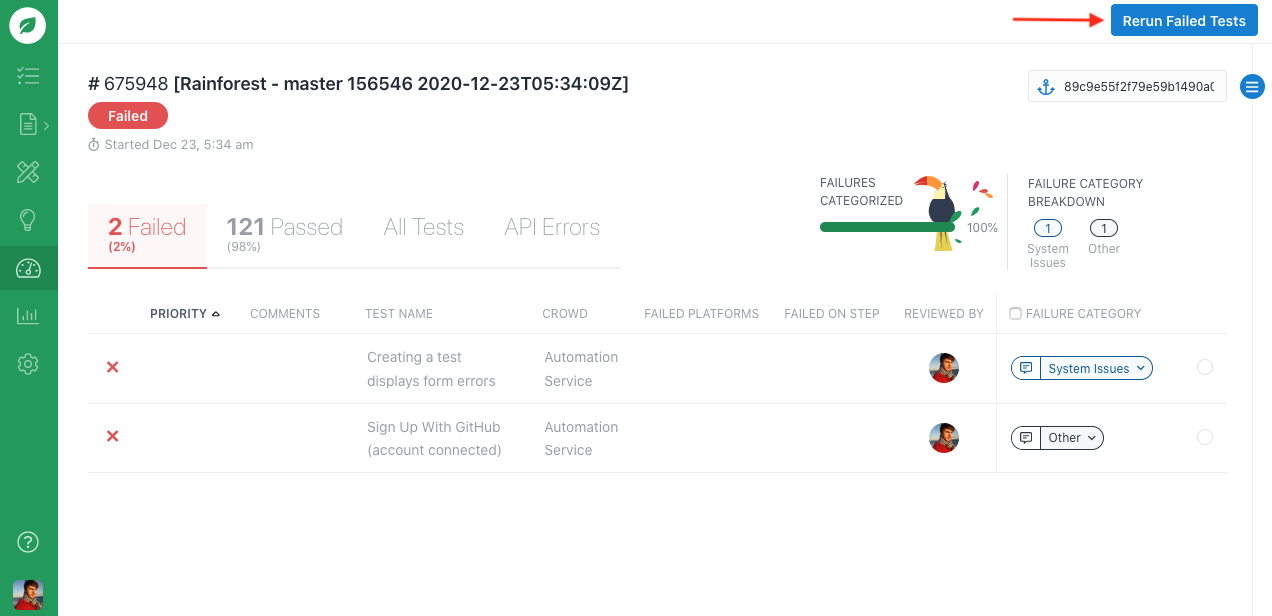

Limitations of the “Rerun failed tests” button

Rainforest has had a “Rerun failed tests” button in our web UI for many years now, and it does just this. But that button is not useful if you are kicking off your Rainforest runs from a CI environment. You’ll see the rerun tests pass, but since the rerun was started outside of your CI environment, CI won’t know about it or its result, and your release will remain blocked.

Additional consequences of rerunning all tests

Needing to rerun all tests whenever there’s a failure has a larger impact on release time than appears at first glance. Flaky tests that may have passed the first time around might fail in the rerun, requiring a third run to be kicked off. And if you know ahead of time that a test will fail, you will need to supervise the build to interrupt it after it has deployed to your QA environment, but before the run is triggered—so you can edit the test in question before resuming the build. This was becoming an issue for us internally—our engineers were spending more time supervising and waiting on releases, time that would have been better spent doing something more interesting.

The Rainforest release workflow

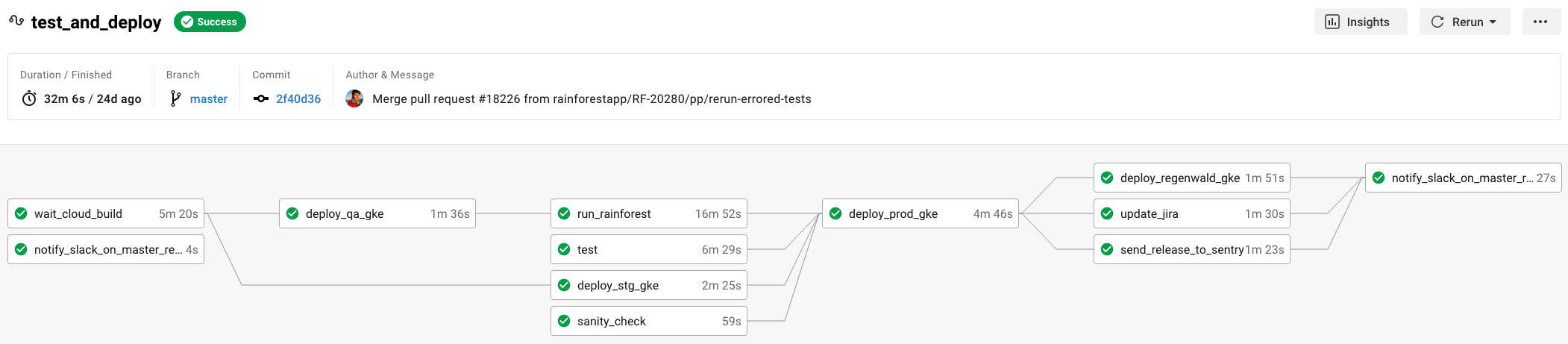

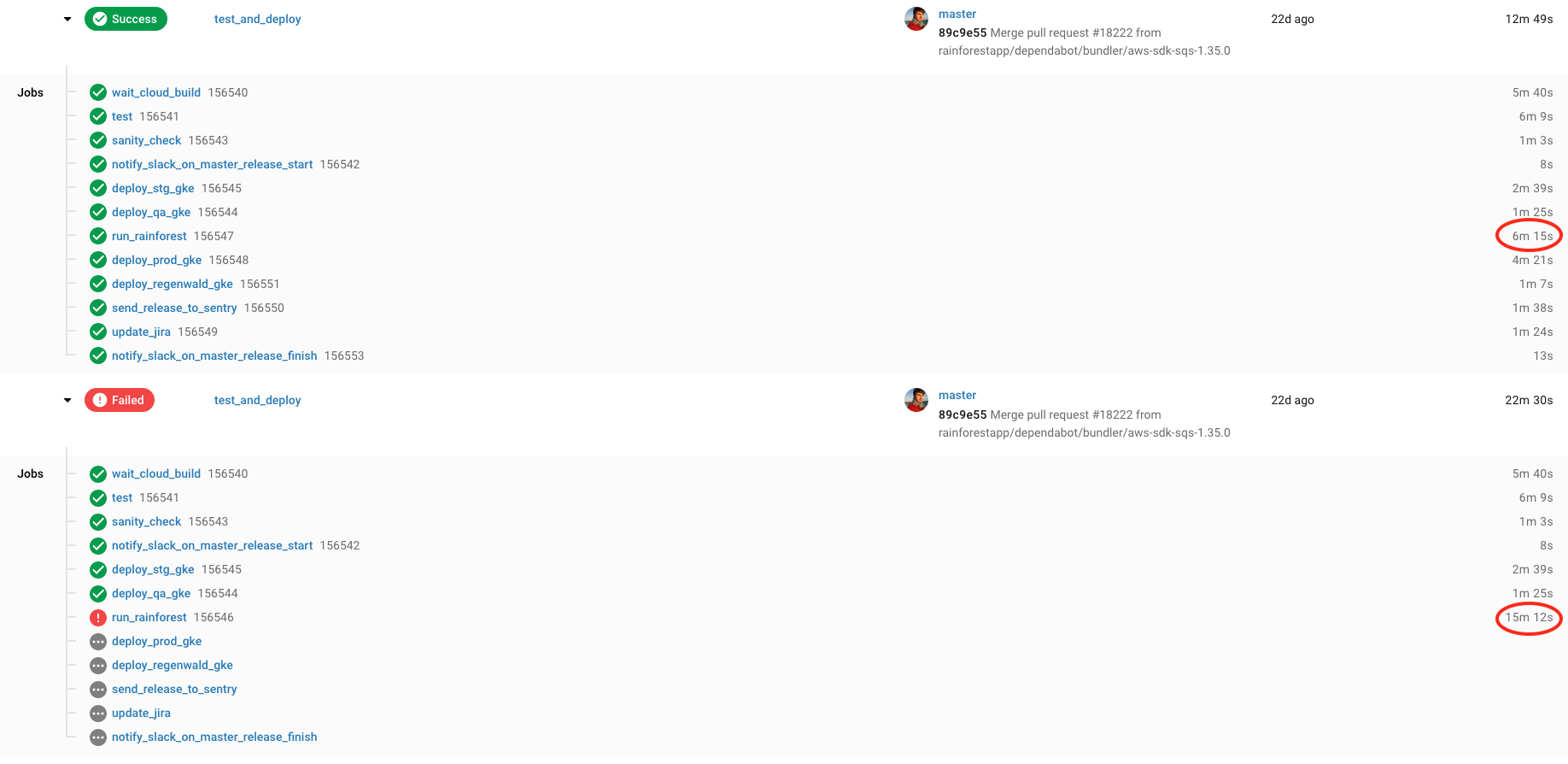

To ship a change, we have a manual PR review, merge to master, and the rest is managed automatically via CI/CD, including running Rainforest tests, which are kicked off via our CircleCI Orb. When the Rainforest run fails (the run_rainforest job in the workflow diagram above), we review the results. If all test failures were due to non-bugs, then once the failures have been resolved (e.g. outdated tests have been updated) we click the “Rerun workflow from failed” button in CircleCI’s web interface to resume our release without having to rerun our unit tests or redeploy to our QA environment.

In its original release, the Rainforest Orb was just a simple wrapper around our CLI’s run command. Rerunning the failed workflow meant rerunning the full Rainforest test suite and needlessly delaying our release. We changed this in our v2 release: when you rerun a failed CircleCI workflow that contains a failed Rainforest run, only the failed Rainforest tests are rerun.

Digging into the v2 code

To understand how this works, there are a couple of CircleCI concepts to figure out: pipelines and caching. In short, pipelines are a way of grouping workflows in CircleCI. If you rerun a failed workflow, that second workflow will be in the same pipeline. If you push a change to trigger a new workflow, that will be in its own pipeline. Using pipelines gives you access to a number of built-in pipeline variables, notably an id variable to know which pipeline we are currently in.

Caching is how CircleCI allows you to persist data across workflows. There are two pieces of data that we need to store when a run fails: the CircleCI pipeline ID and the Rainforest run ID. The save_run_id command does this by saving the run ID in a file named after the pipeline ID; by default, it runs only when the job has failed. The Rainforest run ID is itself obtained by parsing the JUnit results file created by our CLI once the run has completed.

- run:
    name: Save Pipeline and RF Run ID
    command: |
      mkdir -p ~/pipeline
      xmllint --xpath "string(testsuite/@id)" ~/results/rainforest/results.xml > ~/pipeline/<< parameters.pipeline_id >>

The next step is to then persist that file to the cache, using CircleCI’s built-in save_cache step:

- save_cache:
    when: on_fail
    key: rainforest-run-{{ .Revision }}-{{ .BuildNum }}
    paths:
      - ~/pipeline

In the Orb’s source, restoring this cache is the first step of the same job:

- restore_cache:
    keys:
      - rainforest-run-{{ .Revision }}-{{ .BuildNum }}
      - rainforest-run-{{ .Revision }}-

This means we can now check in the core run_qa command if the current pipeline already had a Rainforest run executed, in which case we’ll try to rerun it rather than create a new run:

# Check for rerun
if [ -n "<< parameters.pipeline_id >>" ] && [ -s ~/pipeline/<< parameters.pipeline_id >> ] ; then
  export RAINFOREST_RUN_ID=$(cat ~/pipeline/<< parameters.pipeline_id >>)
  echo "Rerunning Run ${RAINFOREST_RUN_ID}"
  if ! << parameters.dry_run >> ; then
    # Create the rerun
    rainforest-cli rerun ...
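If you want to poke at that check outside of CircleCI, here is a minimal sketch of the same decision in plain shell. The decide_run function, its arguments, and the sample IDs are made up for illustration; the Orb inlines this logic with << parameters.pipeline_id >> rather than using a function.

```shell
#!/bin/sh
# Sketch of the rerun check, runnable outside CircleCI.
# decide_run and its arguments are hypothetical names for illustration.
decide_run() {
  pipeline_dir=$1
  pipeline_id=$2
  run_id_file="$pipeline_dir/$pipeline_id"

  # -n: a pipeline ID was provided; -s: the file exists and is not empty
  if [ -n "$pipeline_id" ] && [ -s "$run_id_file" ]; then
    echo "rerun $(cat "$run_id_file")"
  else
    echo "new run"
  fi
}

# Simulate a restored cache holding run 1234 for pipeline "abc-123"
demo_dir=$(mktemp -d)
echo 1234 > "$demo_dir/abc-123"

decide_run "$demo_dir" "abc-123"   # a run ID was cached for this pipeline
decide_run "$demo_dir" "def-456"   # nothing cached for this pipeline
decide_run "$demo_dir" ""          # no pipeline ID at all
```

The -s test is what makes an empty file (for example, if parsing the results file produced nothing) fall back to a brand new run instead of attempting a rerun with a blank ID.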

Since releasing this new feature, we’ve shaved untold hours off our releases. Are you a Rainforest customer using CircleCI for your release process? Add our Orb to your CircleCI configuration file to take advantage of this and speed up your releases. Not using CircleCI? We’re working on integrations for other CI/CD platforms, so let us know if one would be helpful for your setup.

Making git history great again

My previous post covered how to write bite-sized commits (spoiler: use git add -p).

$ git add -p foo.rb # only add bug fix

$ git commit -m "Fix qux bug"

$ git add -p foo.rb # add remaining spacing fixes

$ git commit -m "Clean up spacing"

And there you go, a very clean git history! But what if you notice another spacing error after you’ve already committed?

$ git add -p foo.rb # add forgotten spacing fix

$ git commit -m "More spacing fixes"

Ugly. Let’s try that again.

$ git add -p foo.rb # add forgotten spacing fix

$ git commit --amend --no-edit

Much better. The --amend option adds your changes to the latest commit. The --no-edit option means it’ll keep the original commit message. Congrats, you’ve rewritten history!
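If you’d like to see the effect of --amend without touching a real project, here is a small sketch that plays the scenario out in a throwaway repository. The file contents and the demo identity are placeholders, and it uses plain git add rather than git add -p so that it can run unattended.

```shell
#!/bin/sh
# Throwaway repository showing that --amend folds staged changes
# into the latest commit instead of creating a new one.
set -eu
export GIT_AUTHOR_NAME=demo GIT_AUTHOR_EMAIL=demo@example.com
export GIT_COMMITTER_NAME=demo GIT_COMMITTER_EMAIL=demo@example.com

amend_repo=$(mktemp -d)
cd "$amend_repo"
git init -q

echo "puts 'qux'" > foo.rb
git add foo.rb
git commit -q -m "Fix qux bug"

echo "puts 'spacing'" >> foo.rb
git add foo.rb
git commit -q -m "Clean up spacing"

# The forgotten spacing fix goes into the existing commit
echo "puts 'more spacing'" >> foo.rb
git add foo.rb
git commit -q --amend --no-edit

# Still two commits, with their original messages
git log --format=%s
```

Keep in mind that amending replaces the commit with a new one, so this is best done before the commit has been pushed anywhere shared.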

It’s not always so easy though. Let’s say that after running all tests, you realize your bugfix broke one obscure test you didn’t even know existed. You fix that test to pass, and then what?

$ git add -p test_bar.rb # add fix to old test

$ git commit -m "Fix old test"

Ugly. There’s one more step you need to do.

git rebase -i

What we want to do is rewind history, skip to before that spacing fix commit, and then amend our test fix changes into the original bugfix commit. git rebase -i lets us do that.

The naïve way of doing this would be to stash our changes (rather than commit them), and then opt to edit the bug fix commit:

$ git add -p test_bar.rb # add fix to old test

$ git stash

$ git rebase -i @~2 # will bring up the last two commits

This will bring up the interactive rebase editor:

pick abc1234 Fix qux bug

pick 567defg Clean up spacing

Since we want to change the first commit, we replace that first pick with edit and save out of the editor. That will start the rebase, pausing after the first commit.

$ git stash pop

$ git commit --amend --no-edit

$ git rebase --continue

That works, but there’s a better way of doing it. Rather than dealing with the stash and interrupting the rebase, we can use another tool from the interactive rebase editor: fixup. Fixup squashes a commit into the previous one, discarding its commit message. Back to our example:

$ git add -p test_bar.rb # add fix to old test

$ git commit -m "whatever I'm getting squashed anyways"

$ git rebase -i @~3 # we have an extra commit this time around

Back to the interactive rebase editor:

pick abc1234 Fix qux bug

pick 567defg Clean up spacing

pick hij890k whatever I'm getting squashed anyways

This time, let’s move the last commit to sit right after the first one, and replace pick with fixup:

pick abc1234 Fix qux bug

fixup hij890k whatever I'm getting squashed anyways

pick 567defg Clean up spacing

Save out, and the rebase will autorun to completion[1]. This gives us the same result as the stash strategy, but it still feels like a little too much work.

We can shave off part of the work by using git rebase’s --autosquash flag. That flag looks for specially formatted commits in order to prepare the rebase for you. Back to our example:

$ git add -p test_bar.rb # add fix to old test

$ git commit -m "fixup! Fix qux bug"

$ git rebase -i --autosquash @~3

And now the editor opens up with our commits in the desired order and with the desired actions:

pick abc1234 Fix qux bug

fixup hij890k fixup! Fix qux bug

pick 567defg Clean up spacing

You can save out right away, and the rebase will run. This saves us work in the interactive rebase editor, but now we need to worry about properly typing out our throwaway commit’s message. Surely there’s a better way?

The final piece: git commit’s --fixup flag. Rather than manually adding a message, we can use this flag and pass a commit reference or SHA, and git will autogenerate the correct commit message for us:

$ git add -p test_bar.rb # add fix to old test

$ git commit --fixup=@~1

$ git rebase -i --autosquash @~3

This will give us the same text in the interactive rebase editor as above. Once again, all you need to do is save out.
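To try the whole --fixup and --autosquash flow end to end, here is a sketch that runs it in a throwaway repository. The file contents, the demo identity, and the extra initial commit (there so that @~3 exists) are placeholders. It also sets GIT_SEQUENCE_EDITOR=true so the rebase todo list is accepted as generated, which stands in for saving out of the editor by hand.

```shell
#!/bin/sh
# Throwaway repository demonstrating commit --fixup and rebase --autosquash.
set -eu
export GIT_AUTHOR_NAME=demo GIT_AUTHOR_EMAIL=demo@example.com
export GIT_COMMITTER_NAME=demo GIT_COMMITTER_EMAIL=demo@example.com

fixup_repo=$(mktemp -d)
cd "$fixup_repo"
git init -q

# Starting point, so that @~3 exists later on
echo "qux" > foo.rb
echo "old test" > test_bar.rb
git add foo.rb test_bar.rb
git commit -q -m "Initial commit"

echo "qux fixed" > foo.rb
git add foo.rb
git commit -q -m "Fix qux bug"

echo "" >> foo.rb
git add foo.rb
git commit -q -m "Clean up spacing"

# The late test fix targets the bugfix commit, which is now @~1
echo "fixed test" > test_bar.rb
git add test_bar.rb
git commit -q --fixup=@~1

# "true" leaves the generated todo list untouched, like saving out immediately
GIT_SEQUENCE_EDITOR=true git rebase -q -i --autosquash @~3

# Three commits remain; the test fix now lives in "Fix qux bug"
git log --format=%s
git show --name-only --format=%s @~1
```

The fixup commit touches a different file than the spacing commit here, so reordering them cannot conflict. In a real branch, that is not guaranteed.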

1. Unless you have a rebase conflict somewhere.

Making your commits clean and simple

When I first started using git, I quickly learned about committing with -a (--all). A flag that would let me spend less time in the git CLI, amazing![1] I would add, edit, and delete code all over the place, git commit -am "some bad commit message", and that was it! I was soon using -a for every single commit.

I learned the cons of abusing -a when I started working on larger projects with a more storied past, and had to resort to git blame to try and understand the logic behind any particular WTF that I encountered. I would sometimes quickly find the answer to my initial incomprehension in the blamed commit, but all too often I would only be left with more questions. This was primarily because these commits were too complex. They were doing more than one thing, which meant that the commit message did not help, and that parsing the commit diff was tough and time-consuming.

I recently stumbled upon one such monster commit[2] while investigating a WTF, which prompted me to write this post. There is a better way to commit your code!

The first obvious step is to not use -a, and instead add files individually. That helps to avoid committing completely separate changes in different files together, but what about when you have separate changes in the same file? That’s where the -p (--patch) option saves the day!

Let’s use an example to illustrate the rest of this post:

While fixing a bug in the function foo defined in bar.py, we notice that one of the functions called by foo has a misspelled name. Following the Boy Scout Rule, we decide to fix the typo and rename the function, which is being called all over bar.py.

We go to commit our changes:

$ git add bar.py

$ git commit -m "Fix bug in foo"

Now, when someone else goes to blame bar.py, they will see “Fix bug in foo” all over the place. And when they git show to see what the bug was, they will have to scroll through a lot of renames before finally finding what they came to see.

Let’s say we’d used -p instead:

$ git add -p bar.py

-p allows us to stage individual hunks rather than entire files. When adding files with the flag toggled, we enter git’s interactive mode, where we are asked, hunk by hunk, whether we want to stage the displayed changes. We can now stage all of the renaming-related hunks (replying y for these), and leave out the bugfix for its own commit (replying n for those).

$ git commit -m "Rename misspelled function"

$ git add -p bar.py

$ git commit -m "Fix bug in foo"

git blame now tells a truer story, and our bugfix commit is as simple as it gets.

Using -p can also help you catch a forgotten debugger statement or anything else that you might overlook when blindly git adding or nonchalantly scrolling through a git diff wall of text.

If a particular hunk contains changes you want to stage and others you don’t, you can try splitting it by replying s. If that doesn’t work, you can manually edit a hunk by replying e. If you accidentally stage a hunk that you had intended not to, you’ll be delighted to learn that git reset also takes in -p, which works in the exact same manner as it does for git add.

Coupled with git rebase -i, this will make your git history clean and simple: one commit does one thing. It makes pull request reviewing much smoother, and code archeology much saner.

1. This is “new to git, scared of the CLI”-me talking. I love the CLI, it’s an amazing tool, despite being scary for neophytes like past-me.

2. For the curious, this commit had 944 additions and 1,007 deletions spread over 10 files. The commit message? “huge speed improvements, plus big code cleanup”.

Hello World!

This weekend, I decided to finally join the GitHub Pages with Jekyll party. It’s as easy to set up as you’ve heard. Just follow GitHub’s instructions and you’ll be good to go. The only step that’s missing is fairly straightforward: creating a new Jekyll site, after installing Jekyll, but before running bundle exec jekyll serve.

It’s just one line: jekyll new .

If you’ve followed GitHub’s instructions though, you may run into this error:

Conflict: /Users/paul/mysite exists and is not empty.

Fortunately, all you need to do is move everything out, re-run the command, and move everything back in.

mysite$ mkdir ~/tmp

mysite$ mv * ~/tmp

mysite$ jekyll new .

mysite$ mv ~/tmp/* .

mysite$ rmdir ~/tmp

This is just a Hello World! post; the next one should be more technical than a primer on mkdir and mv :)
